In the early days of data architecture, the answer to every question was a SQL query. If you needed to know your monthly recurring revenue or find a customer's email, you hit a relational database. It was precise, structured, and reliable.

But as we move into the era of Unstructured Data and LLMs, a new challenger has emerged: Data Chunking.

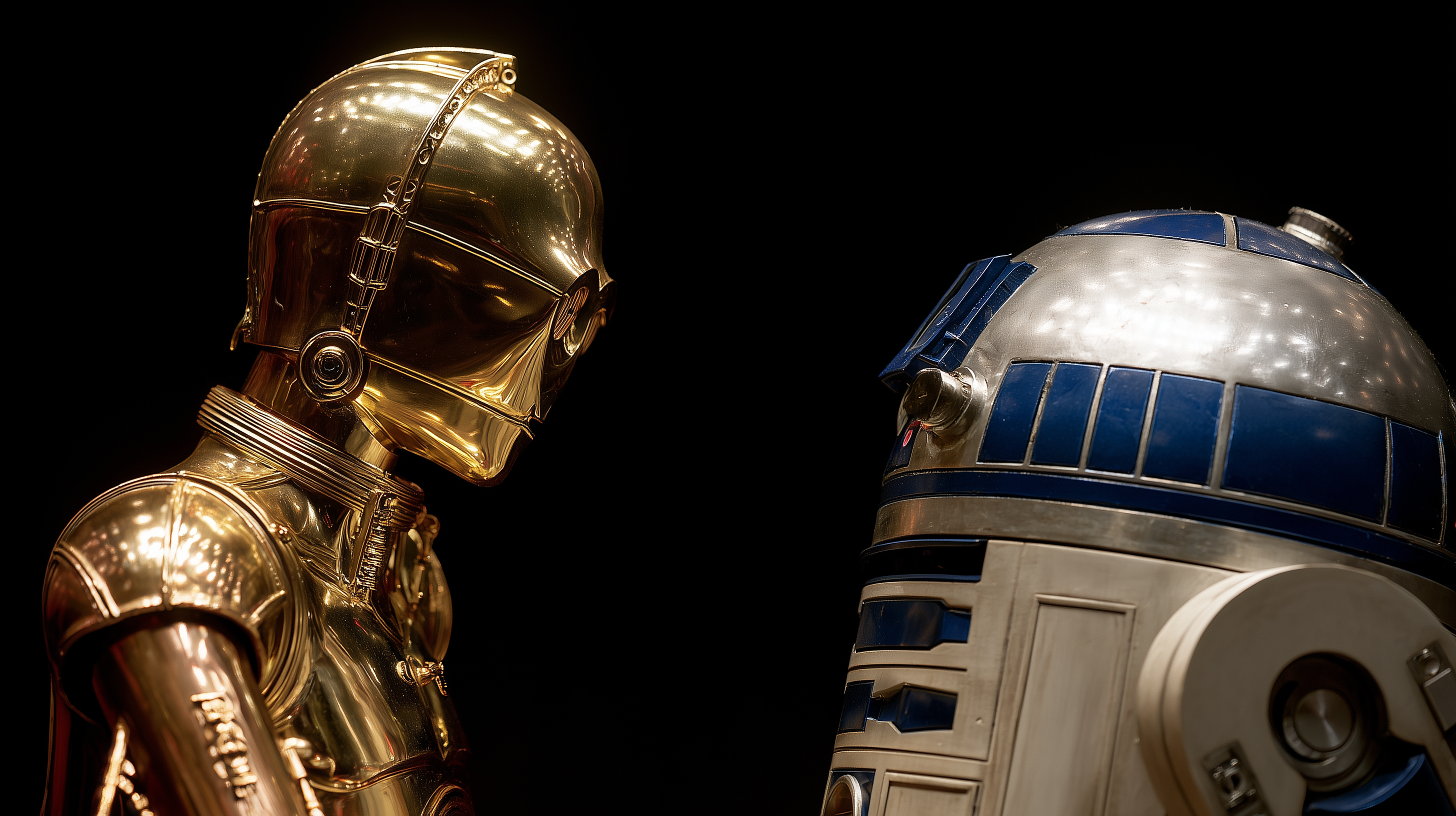

This is the shift from "Exact Match" to "Semantic Similarity." To understand the difference, look no further than the most famous droid duo in the galaxy.

The "C-3PO vs. R2-D2" Analogy

SQL is C-3PO: He is a protocol droid. He knows over six million forms of communication, but he is a stickler for the rules. If you don't give him the exact coordinates or the right etiquette, he'll tell you the "odds of failure" are 3,720 to 1. He is your Source of Truth for facts, etiquette, and structured translation.

Chunking is R2-D2: R2 doesn't talk in structured sentences; he beeps in "vectors." He can plug into any messy interface, bypass security, and find the specific holographic recording of Princess Leia you need, even if you don't know the file name. He navigates the unstructured "noise" of the Imperial network to find the "signal" that saves the day.

The Takeaway: You need C-3PO to keep your data "Golden" and compliant, but you need R2-D2 to go into the trenches and retrieve the context.

1. The Philosophy: Structure vs. Meaning

The fundamental difference between these two approaches is how they "see" your data.

SQL Querying (The "Librarian" Approach)

SQL treats data like a perfectly organized filing cabinet. Every piece of information has a specific home (a column) and a specific format (a data type).

- The Goal: Absolute precision.

- The Mechanism: Logical filtering (A = B).

Data Chunking (The "Context" Approach)

Chunking is the process of breaking long-form text—PDFs, documentation, or transcripts—into smaller, digestible pieces. These pieces are then converted into "embeddings" (mathematical vectors) that represent the meaning of the text.

- The Goal: Relevance and nuance.

- The Mechanism: Mathematical proximity in a multi-dimensional space.

2. Layering the Logic: When to Use Which

To build a scalable platform, you shouldn't choose just one. You need to understand where they sit in your "Layer Cake" of data retrieval.

The Case for SQL: The "Single Source of Truth"

If you are dealing with numbers, dates, or strict categories, SQL is non-negotiable.

- Pros: It provides "Unit Tests" for reality. If you ask for revenue from 2023, you get a calculation, not a "guess."

- Cons: It is context-blind. If a user searches for "troubleshooting login issues" but your database only has a column for

error_code, SQL won't bridge that gap.

The Case for Chunking: The "Logic Engine" for AI

When you're building RAG (Retrieval-Augmented Generation) systems, you use chunking to feed the LLM.

- Pros: It handles "Spaghetti Data." You can dump 1,000 messy text files into a vector store, and the system will find the most relevant paragraphs based on a conversational question.

- Cons: It can suffer from "The Lost in the Middle" problem. If your chunks are too small, they lose the surrounding context; if they are too large, the "signal" gets lost in the "noise."

3. Comparison: Precision vs. Flexibility

| Feature | SQL Querying | Data Chunking (Vector) |

|---|---|---|

| Data Type | Structured (Tables) | Unstructured (Text/Images) |

| Output | Exact Records | Relevant Snippets |

| Search Style | Keywords & Logic | Semantic Meaning & Intent |

| Scaling | Scales with Rows/Indexes | Scales with Embedding Dimensions |

4. The "Unit Tests" of Retrieval

In a SQL world, a "test" ensures a value isn't null. In a Chunking world, a "test" ensures Retrieval Accuracy.

When you chunk data, you have to tune your strategy like a software engineer:

- Chunk Size: How many characters per piece? (e.g., 500 tokens).

- Overlap: How much context do we carry over from the previous chunk to ensure no logic is cut in half?

- Metadata Tagging: Even in a "chunked" world, we often attach SQL-like metadata (e.g.,

created_at,author) to the chunks to allow for hybrid filtering.

5. The Staging-to-Vector Pipeline: Why "SQL First" Wins

If you treat your vector database like a junk drawer, your LLM will give you junk answers. To fix this, we apply the same Layer Cake logic from the dbt world to our AI data.

Layer 1: The SQL Filter (The "Bouncer")

Before a single sentence is chunked, it has to pass through a SQL-based Staging layer.

- Goal: Eliminate noise.

- What happens: We use SQL to filter out

deleted_atrecords, draft versions, and files under a certain character count. - Why: You don't want your AI citing a "Test Document" from 2019 as the current company policy.

Layer 2: The Metadata Anchor (The "ID Card")

While the text is still in your relational database (SQL), you attach "Metadata."

- Goal: Create a trail of breadcrumbs.

- What happens: You assign every row a

source_id,department_tag, andlast_updated_at. - Why: When the AI eventually finds a "chunk," it needs to know exactly which SQL row that chunk came from so it can verify the date or check user permissions.

Layer 3: The Chunking Engine (The "Transformation")

Now that the data is clean and tagged, we pull it from SQL and break it into chunks.

- Goal: Optimize for the LLM's "Context Window."

- What happens: We split a 50-page technical manual into 500-token pieces with a 10% overlap.

- Why: This ensures the "Logic Engine" (the LLM) gets a digestible bite of information without losing the surrounding context.

The Workflow: From Row to Vector

| Step | Action | Tool | Purpose |

|---|---|---|---|

| 1 | Clean & Filter | SQL | Ensure data is "Golden" and valid. |

| 2 | Tag Metadata | SQL | Attach IDs, dates, and security tiers. |

| 3 | Chunk & Embed | Python/Vector DB | Turn text into embeddings. |

| 4 | Hybrid Query | SQL + Vector | Use SQL to filter by "Dept" and Vector to find "Meaning." |

Final Verdict: Don't Build on Raw Sand

Starting with SQL isn't just "extra work"—it's Infrastructure.

By organizing your data in a relational database first, you create a "Source of Truth." When your AI model inevitably gets an upgrade, you don't have to re-clean your data. You just point the new model at your already-clean SQL tables and re-run the chunking.

In short: SQL is for your Organization; Chunking is for your Intelligence. You need the protocol of C-3PO to ensure the resourcefulness of R2-D2. You need the first to trust the second.