In the early days of data analysis, we were all "SQL Poets." We wrote 500-line queries that were beautiful, complex, and completely unmaintainable. Logic was buried in sub-queries, business rules were copy-pasted across five different dashboards, and if a column name changed in the database, the entire reporting infrastructure collapsed.

This is what we call "Spaghetti Analytics." It works for a team of one, but it breaks for a team of ten.

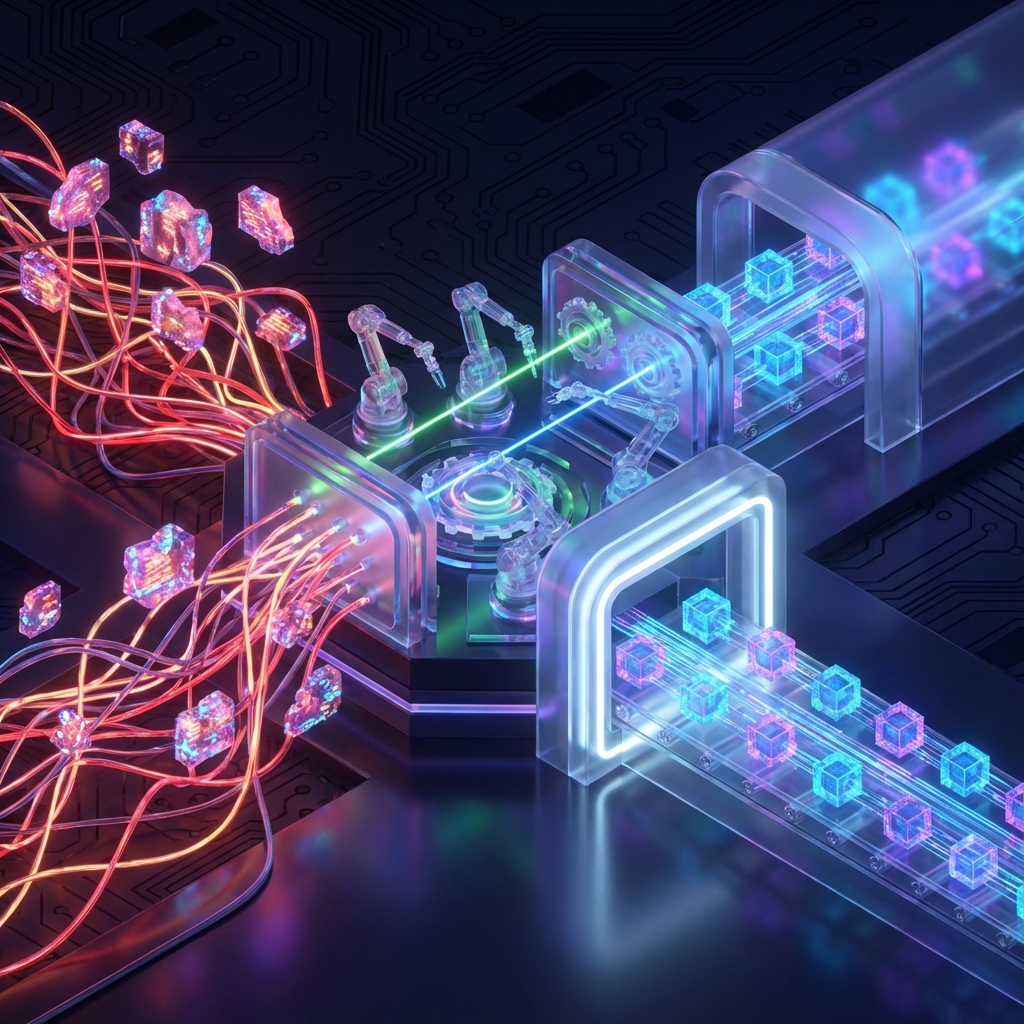

To build a modern, scalable tech stack, you need to stop treating SQL like a scripting language and start treating it like Software Engineering. That is what dbt (data build tool) does. It allows you to apply version control, modularity, testing, and documentation to your data pipeline.

Here is the architectural deep dive on how to structure a dbt project for a clean, organized, and actionable data stack.

1. The Philosophy: The "Layer Cake" Architecture

Stop writing one massive query that goes from raw_data to final_dashboard. That is a "Black Box." If the revenue number looks wrong, you have to peel back 15 layers of nested logic to find the error.

In dbt, we break transformations into three strict layers. This maximizes reusability and keeps your stack organized.

Layer 1: Staging (The "Cleaning Room")

Goal: 1-to-1 mapping with source tables.

Rule: No joins. No aggregations. Just cleaning.

This is the foundation. You take the raw, ugly database table and turn it into a clean building block that can be used by anyone in the organization.

- Rename columns: Change cryptic names like

c_idtocustomer_id. - Cast types: Turn the string "2024-01-01" into a real DATE object.

- Standardize: Turn 1/0 into true/false.

-- models/staging/stg_stripe__payments.sql

with source as (

select * from {{ source('stripe', 'charges') }}

),

cleaned as (

select

id as payment_id,

order_id,

-- Fix the units once, here, so no one else has to guess later.

amount / 100.0 as amount_usd,

created as created_at,

status

from source

)

select * from cleanedLayer 2: Intermediate (The "Logic Engine")

Goal: Complex joins and business logic.

Rule: This layer is never exposed to the end user (BI tools).

This is where the sausage is made. You join Payments to Orders. You calculate "Lifetime Value." By keeping this separate, you create reusable logic components. If you need to change how "Churn" is calculated, you change it here once, and it propagates everywhere.

Layer 3: Marts (The "Presentation Layer")

Goal: Wide, denormalized, easy-to-read tables.

Rule: One Big Table (OBT) organized by business concept.

This is the "API" for your business. When a Data Analyst opens Tableau or PowerBI, they shouldn't have to join five tables to answer a question. They should see a fct_orders table that already contains the customer name, product category, and shipping region. It makes self-service analytics actually possible.

2. The "Unit Tests" for Reality

In software engineering, code breaks because of bad logic. In data engineering, code breaks because of bad data.

- What if a Primary Key is suddenly NULL?

- What if a "Discount" column has a value of 200%?

- What if a "Status" code appears that you've never seen before?

dbt allows you to write tests that run every time you update your warehouse.

The "Yaml" Guardrails

You define your expectations in a simple text file. If the data violates these rules, the pipeline fails and alerts you before the bad data hits the CEO's dashboard.

version: 2

models:

- name: stg_stripe__payments

columns:

- name: payment_id

tests:

- unique

- not_null

- name: status

tests:

- accepted_values:

values: ['succeeded', 'failed', 'refunded']Why this is critical: Trust is hard to gain and easy to lose. If an executive sees a duplicate row or a null value once, they stop trusting the dashboard forever. Automated testing is your insurance policy against "Silent Failures."

3. The "Time Travel" Feature (Snapshots)

Most databases only store the current state of the world. If a customer moves from "New York" to "London" today, the database updates the record. The history is lost.

But next month, when you try to run a report on "Sales by Region for Last Quarter," that customer will wrongly show up in "London," skewing your historical data.

The Fix: dbt Snapshots

dbt implements "Slowly Changing Dimensions Type 2" automatically. It tracks changes in your raw data and creates a history table for you.

| id | city | valid_from | valid_to |

|---|---|---|---|

| 101 | New York | 2024-01-01 | 2024-02-15 |

| 101 | London | 2024-02-15 | NULL |

Now, your reporting is accurate regardless of when the query is run.

4. Lineage: The Map of Your Business

One of the hardest parts of managing a data stack is "Impact Analysis." If you drop the column referral_source from the users table, what breaks? Does the Marketing Dashboard break? Does the Monthly Finance Report fail?

Because dbt uses the {{ ref() }} function to link models, it automatically generates a dependency graph (Lineage).

You can see exactly which raw data feeds into which final model. You stop guessing and start engineering. You can confidently refactor code knowing exactly what downstream assets depend on it.

Examples in the Wild: The "One Big File" Trap

In my three years of managing and working with analytics engineers (specifically using dbt and Google BigQuery), I have observed a common and destructive pattern: The One Big File.

Team members frequently dump all logic into a single SQL file. It starts innocent—just joining a few tables—but typically balloons into a 500-line monster that handles cleaning, joining, aggregation, and formatting all at once.

The Problem: This monolithic approach makes Quality Assurance (QA) nearly impossible. When a number looks wrong, you have to debug the entire 500-line script to find the one line where a join fanned out or a filter was missed.

The Solution: Strict Layer Enforcement

By forcing the team to adhere to the Staging → Intermediate → Mart structure, we solved the "One Big File" problem.

- Streamlined QA: It makes it much easier to review other people's work because logic is compartmentalized. I can review the Staging layer just for cleaning logic, and the Intermediate layer just for join logic.

- Isolating Errors: We can predict where specific types of errors will occur. If a timestamp format is wrong, we know it's in Staging. If a "Total Revenue" sum is wrong, we know it's in Marts.

- Reducing Bugs: Clear separation generally prevents spaghetti code and ensures higher data quality. You can't accidentally use dirty data in a final report if the dirty data never leaves the Staging layer.

The Verdict: Engineering Over Scripting

If you are running your business on SQL scripts stored in a laptop folder, you are building a skyscraper on quicksand.

dbt forces you to treat data as a Product.

- Modularize (Don't repeat yourself)

- Test (Assert expectations)

- Version Control (Git for data logic)

This is the difference between a "Data Project" and a "Data Platform." It's about building a stack that is robust enough to handle the chaos of the real world.