Every company today wants to build AI, but few are willing to build the plumbing required to support it.

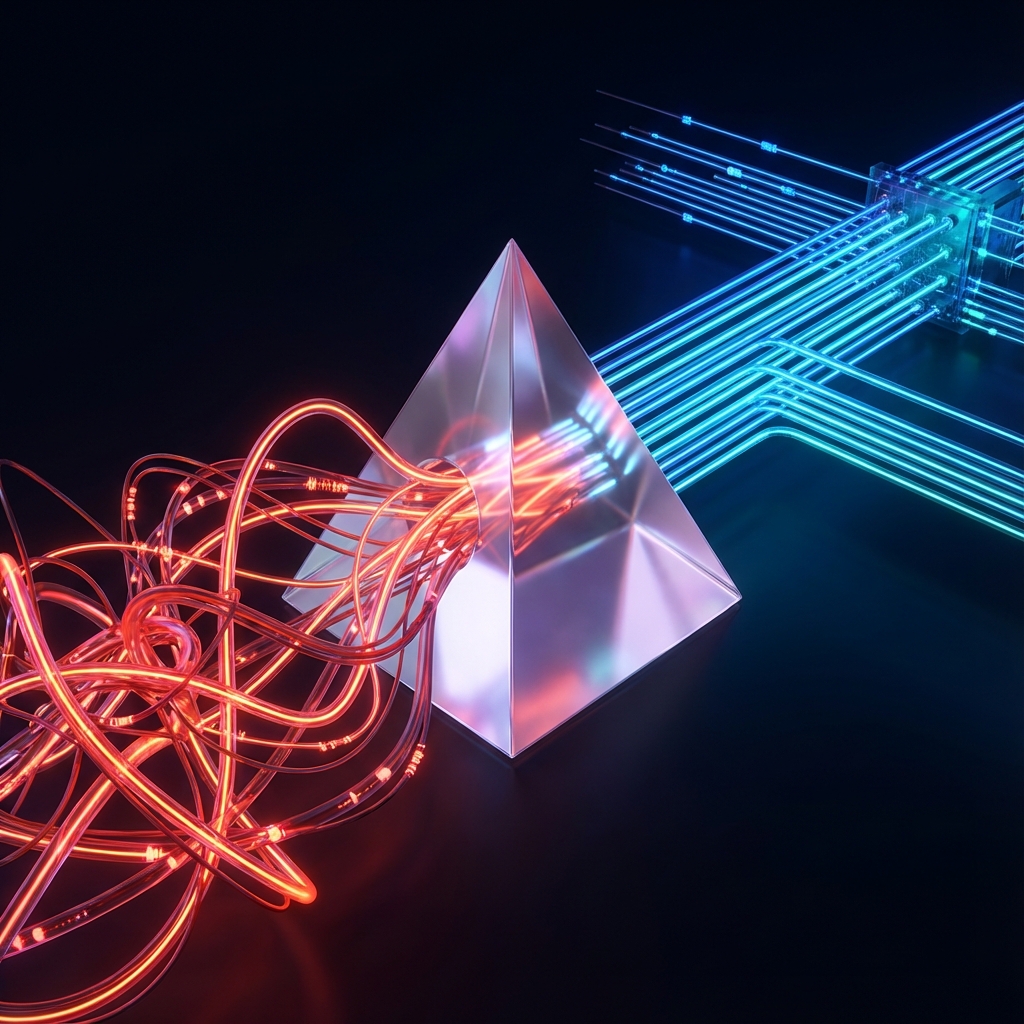

Most developers treat data preparation as a scripting task. They write a Python script to hit an API, grab a CSV, clean up a few typos, and shove it into a model. This is the "Spaghetti Architecture." It is brittle, it is unscalable, and it creates data that is technically "clean" but semantically useless.

You cannot run a Ferrari on sludge, and you cannot run a modern AI model on raw, normalized application data.

To get data that is truly "AI Ready," you need to move from a scripting mindset to an engineering mindset. You need a standard ELT (Extract, Load, Transform) pipeline. Here is the blueprint for cleaning up your data architecture using modern tools like dbt.

1. The "Manual Fetch" Trap (Ingestion Hygiene)

The first step is simply getting the data. The biggest mistake I see is engineers writing custom "connector" scripts (fetch_stripe_data.py) to pull data from their SaaS tools or databases.

The Problem: Custom scripts are the leading cause of "Silent Data Corruption."

- Drift: APIs change. If a pagination parameter changes in Salesforce, your script might silently drop 20% of the records.

- Temporal Gaps: If your script runs on a laptop and the laptop goes to sleep, you have data gaps.

The Fix: Stop writing ingestion scripts. Use a dedicated EL (Extract-Load) tool like Airbyte, Fivetran, or Meltano.

These tools are the "plumbing." They don't care what the data means; their only job is to ensure that if a record exists in your source system, it lands in your data warehouse (like Snowflake or BigQuery) exactly as it is, every single hour. You cannot clean data if you don't trust the delivery mechanism.

2. The "Foreign Key" Fog (Why Raw Data Fails)

Once the data is in your warehouse, you face a new problem. Application databases are designed for software, not intelligence. They use "3rd Normal Form"—meaning data is shattered across dozens of tables to avoid duplication.

Why it hurts AI: An AI model does not understand your database schema. It doesn't know that user_id: 992 refers to "John Doe." To an AI, "992" is just a random number. If you train a model on raw rows, you are training it on noise.

The Smell Test: Look at a single row of your training data.

{

"id": "ord_8823",

"u_id": 992,

"status_code": 3,

"created_at": 1715620000

}Does this row tell a story? No. It requires a human to look up u_id and status_code in two different tables to understand it. If a human can't understand the row in isolation, the AI will fail to learn the pattern.

3. The "Alchemist" Phase (Transformation with dbt)

To fix the "Foreign Key Fog," you must transform that cryptic raw data into a "Denormalized" state—rich, wide tables that contain full context.

We use dbt (data build tool) for this. dbt allows you to write SQL to join these messy tables together and save the result as a new, clean asset. This is where "Data Hygiene" actually happens.

The Fix: Create a dbt model that joins the IDs to their actual meanings. We want to flatten the data so every row contains the full context required to understand it.

-- models/marts/ai_training_set.sql

with orders as (

select * from {{ ref('stg_stripe_orders') }}

),

customers as (

select * from {{ ref('stg_salesforce_customers') }}

)

select

orders.order_id,

-- Hygiene Step 1: Replace ID with Context

customers.first_name,

customers.email,

customers.industry_segment,

-- Hygiene Step 2: Decode "Magic Numbers"

case

when orders.status_code = 1 then 'Pending'

when orders.status_code = 2 then 'Shipped'

when orders.status_code = 3 then 'Refunded'

else 'Unknown'

end as order_status_human_readable,

orders.order_total

from orders

left join customers on orders.customer_id = customers.idThe Result: Now, the data looks like this: "Order 8823 for John Doe (Tech Industry). Status: Refunded."

We have replaced mathematical abstraction with semantic meaning. That is how you prepare data for a model.

4. The "Magic Number" Audit

The most dangerous dirty data isn't a typo; it's an unexplained integer. I recently saw a dataset where the column is_active was 0 or 1, but there was also a column lifecycle_stage with values 10, 20, 30, 99.

Why it hurts AI: The AI will try to find a mathematical relationship between 10 and 20. But often, these numbers are arbitrary labels:

10= "New Lead"20= "Contacted"99= "Banned"

There is no mathematical path from "New Lead" to "Banned," so feeding these raw numbers to the model confuses it.

The Fix: Hard-code your logic in dbt. Never let a "Magic Number" reach your AI. Use your transformation layer to turn 99 into the string "Banned". If the logic lives in your database pipeline, your AI training data remains clean even if the underlying codes change.

Examples in the Wild: The "Human Creativity" Chaos

In my time working with marketing and sales data, I've learned one universal truth: the users of data are constantly engaged in creative sabotage.

The "Naming" Problem: Humans hate consistency. One month, a client is entered as "Lexcorp". The next month, it's "Lex corp." By Q3, it's just "LC". If you are querying for `WHERE client_name = 'Lexcorp'`, you just lost 60% of your data.

The "Channel" Drift: Marketing teams love to invent new shorthand.

- January:

Channel = "Paid Social" - March:

Channel = "ps" - June:

Channel = "pdsoc"

The Result: The "Monthly Fire Drill." Every single month, an engineer (maybe you) has to manually update a CASE WHEN statement in a dbt model to catch these new variations.

The Irony: Organizations call this "Automated Reporting" even though it relies on a human physically editing code every 30 days. Real automation means catching these errors upstream with validation, or using dbt tests to flag "Unknown Values" before they break your dashboard.

The Verdict: Is Your Architecture "Clean"?

Data Hygiene isn't just about regex scripts and removing emojis. It is about architectural empathy. You need to structure your data in a way that respects the way an AI learns.

Before you start your next project, ask these three questions about your data:

- Is it Automated? (Or did a human export a CSV manually?)

- Is it Contextual? (Are we feeding it Names, or IDs?)

- Is it Decoded? (Are we feeding it English words, or status codes?)

If you aren't doing this, you aren't doing Data Science yet. You're just doing Data Entry.

At Ai Prepared, we focus on the final validation—scanning your processed data to ensure it meets the highest standards before it touches your model. But that validation starts with a solid pipeline.